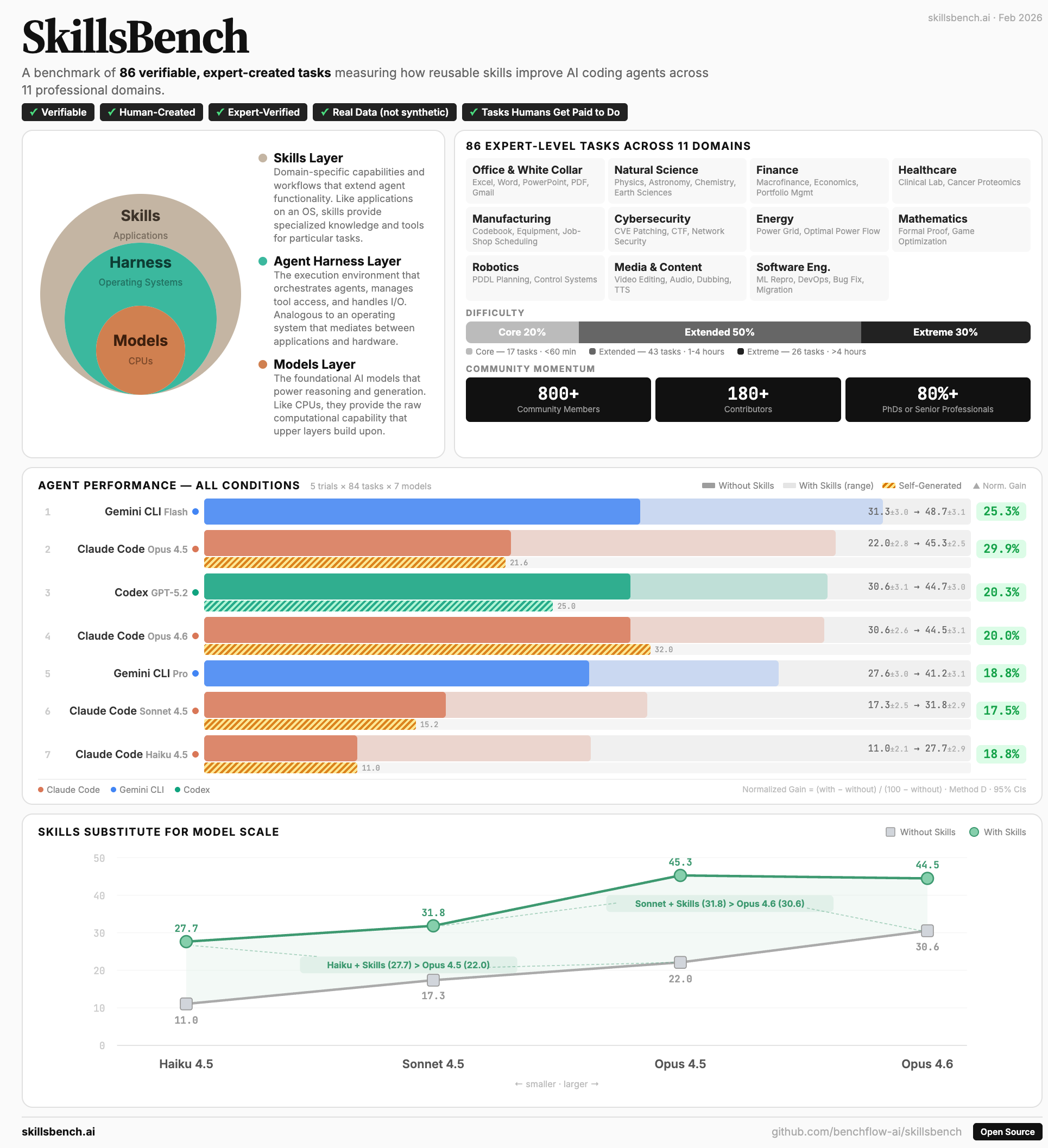

Today we are releasing SkillsBench, the first benchmark designed to systematically evaluate how Agent Skills improve AI agent performance. Across 86 tasks, 11 domains, and 7 agent-model configurations, we find that Skills provide substantial but highly variable benefit - and that when, how, and which Skills you use matters far more than simply having them.

Our paper, SkillsBench: Benchmarking Agent Skills for Frontier LLM Agents, accompanies this release.

The Problem: Agents Need Procedural Knowledge

Large language models have evolved from text generators into autonomous agents. CLI tools like Claude Code, Gemini CLI, and Codex enable developers to leverage frontier models as agentic assistants in the terminal. But a fundamental tension exists: foundation models provide broad capabilities yet lack the procedural knowledge required for domain-specific workflows. Fine-tuning is expensive and sacrifices generality.

Agent Skills offer an alternative. A Skill is a structured package - instructions, code templates, resources, and verification logic - that augments agent behavior at inference time without model modification. Skills encode procedural knowledge: standard operating procedures, domain conventions, and task-specific heuristics. Think of it as modular software for AI agents: foundation models are the CPU, agent harnesses are the OS, and Skills are the applications.

Community repositories now host thousands of user-contributed Skills spanning software engineering, data analysis, and enterprise workflows. Yet despite this proliferation, no benchmark has systematically evaluated how and when Skills improve agent performance, what content drives gains, or what design principles distinguish effective Skills from ineffective ones.

SkillsBench fills that gap.

The Benchmark

Task Suite: 86 Tasks Across 11 Domains

SkillsBench comprises 86 tasks spanning 11 domains, stratified by difficulty based on estimated human completion time:

| Difficulty | Tasks | Human Time |

|---|---|---|

| Core | 17 (20%) | < 60 min |

| Extended | 42 (49%) | 1-4 hours |

| Extreme | 26 (31%) | > 4 hours |

Tasks were contributed by 105 contributors from academia and industry, curated from 322 candidate submissions through a rigorous two-stage selection process including automated validation and human review.

Paired Evaluation Design

Unlike existing benchmarks that evaluate raw model capabilities in isolation, SkillsBench introduces a paired evaluation protocol: every task is evaluated under both vanilla (no Skills) and Skills-augmented conditions. This enables direct measurement of Skills efficacy - the delta between conditions - controlling for model capability, task difficulty, and evaluation infrastructure.

Rigorous Quality Control

Each task is a self-contained Docker container with:

- Instructions written by humans (verified via human review and GPTZero)

- Deterministic verifiers with programmatic assertions - no LLM-as-judge variance

- Leakage prevention via CI-based audits ensuring Skills provide guidance, not solutions

- Oracle solutions demonstrating resolvability

Key Findings

We evaluated 7 agent-model configurations across all 86 tasks with 5 trials per task. After excluding 2 tasks with runtime errors in their verifiers, we report results on 84 tasks, scoring with the Terminal-Bench Method D task-mean approach.

Skills provide substantial but variable benefit

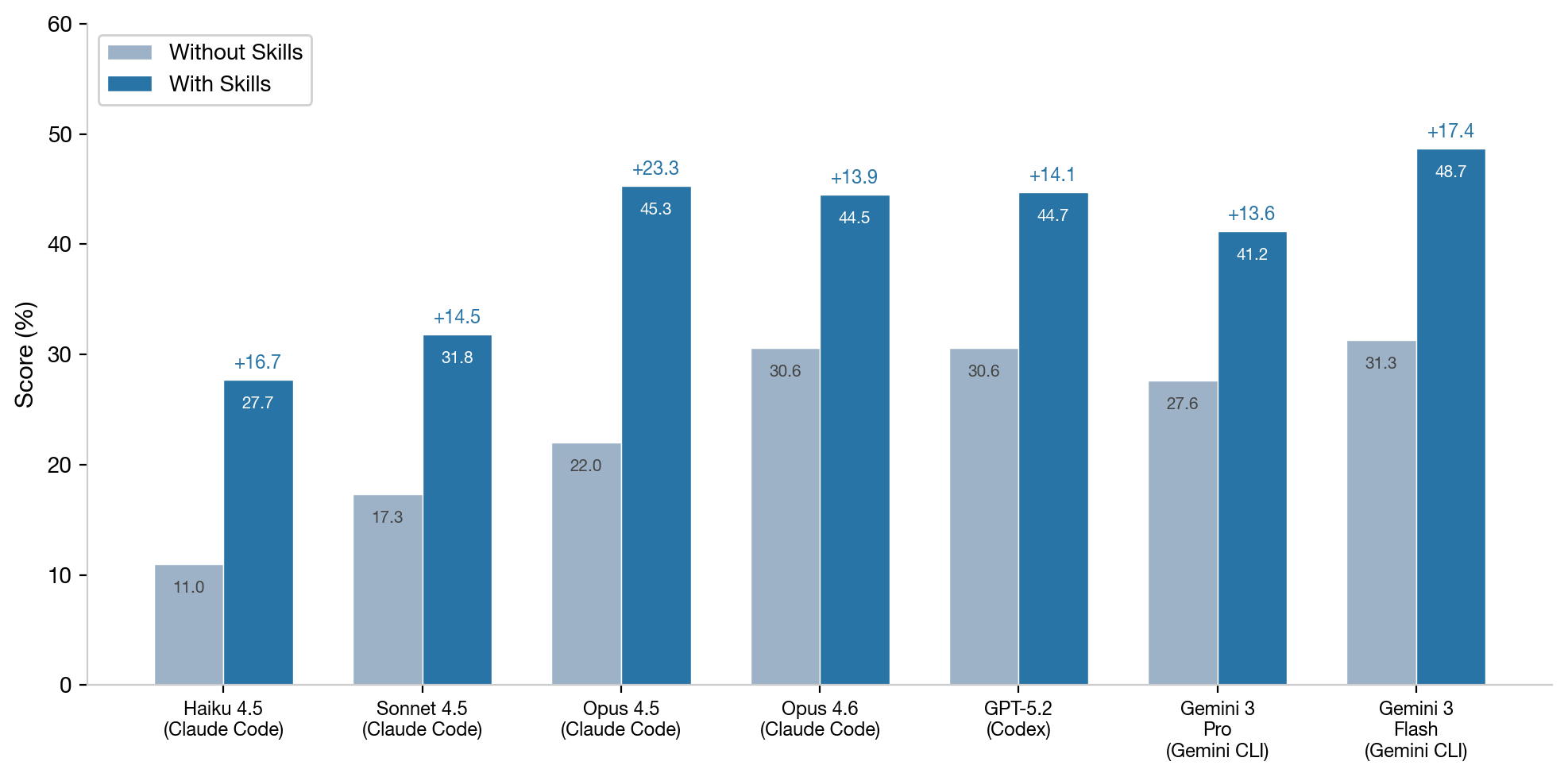

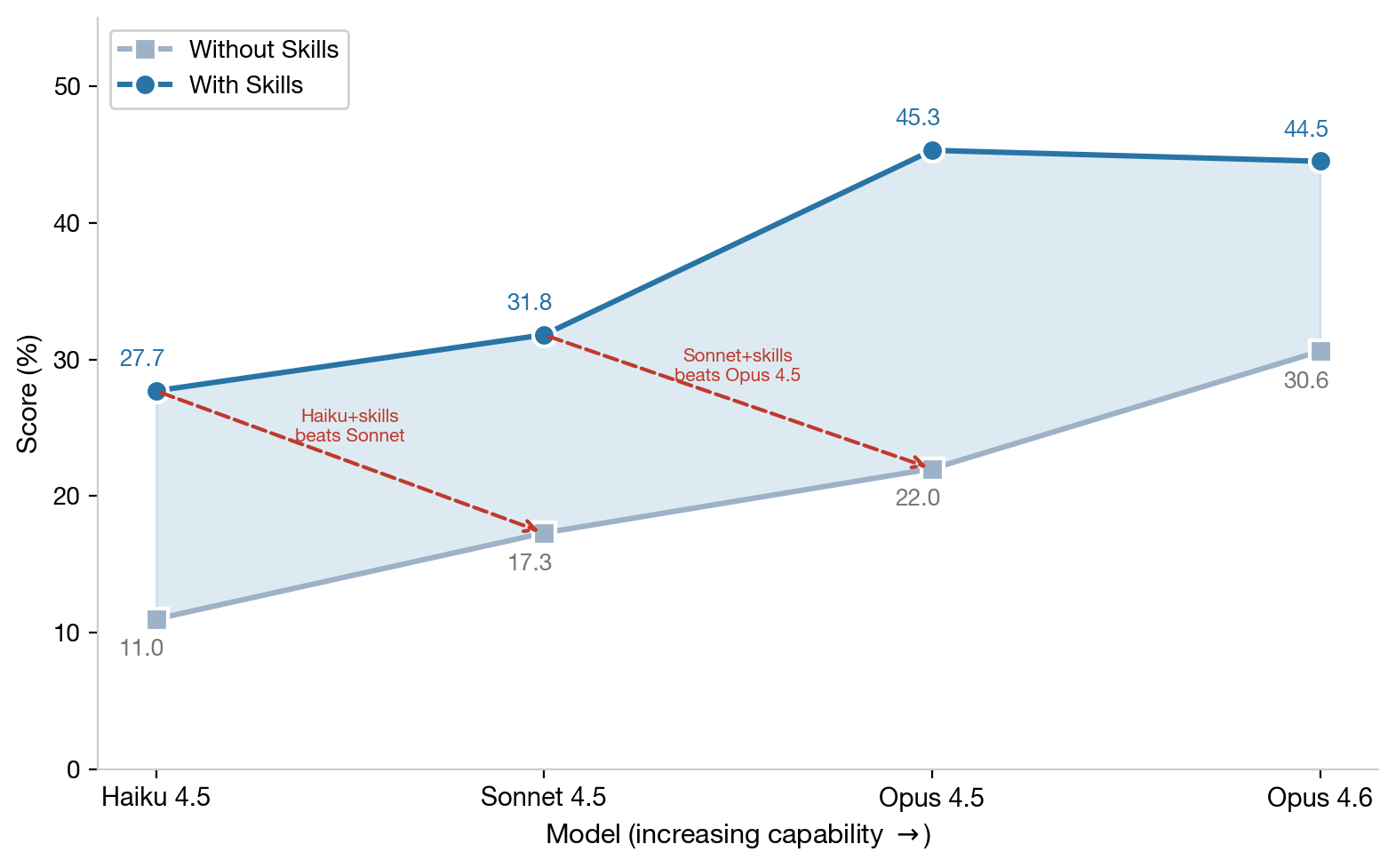

Skills improve performance across every model tested, but the magnitude varies significantly:

| Agent + Model | No Skills | With Skills | Uplift |

|---|---|---|---|

| Gemini CLI (Gemini 3 Flash) | 31.3 | 48.7 | +17.4 |

| Claude Code (Opus 4.5) | 22.0 | 45.3 | +23.3 |

| Codex (GPT-5.2) | 30.6 | 44.7 | +14.1 |

| Claude Code (Opus 4.6) | 30.6 | 44.5 | +13.9 |

| Gemini CLI (Gemini 3 Pro) | 27.6 | 41.2 | +13.6 |

| Claude Code (Sonnet 4.5) | 17.3 | 31.8 | +14.5 |

| Claude Code (Haiku 4.5) | 11.0 | 27.7 | +16.7 |

The absolute uplift ranges from +13.6 to +23.3 percentage points across configurations. Claude Code with Opus 4.5 shows the highest absolute gain (+23.3pp), reflecting Claude Code's native Skills integration optimized for the Agent Skills specification.

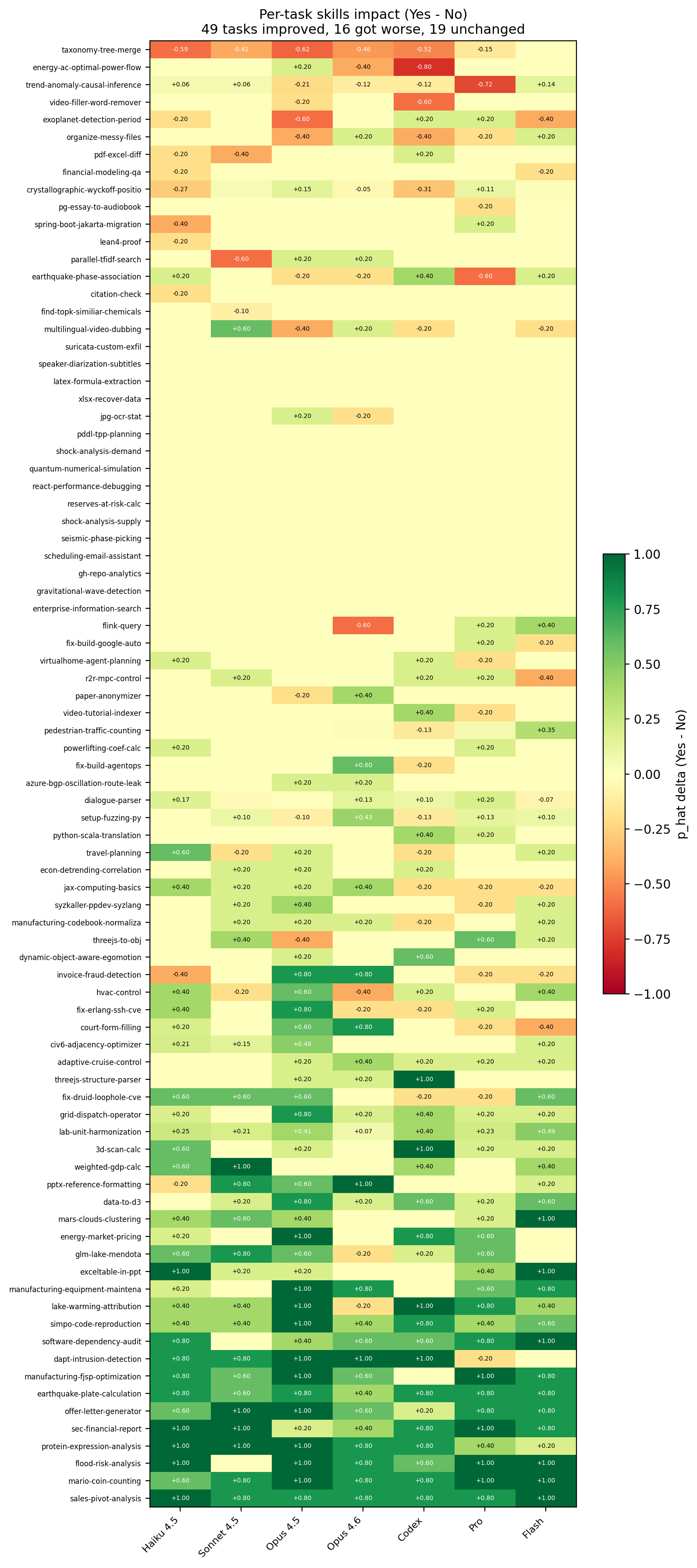

Skills can hurt performance in some domains

Skills don't uniformly help. While Healthcare (+51.9pp) and Manufacturing (+41.9pp) see massive gains, Software Engineering (+4.5pp) and Mathematics (+6.0pp) show minimal benefit. This suggests that for domains where models have strong pretraining coverage, external procedural guidance adds less value than for domains requiring specialized procedural knowledge underrepresented in training data.

At the task level, 16 of 84 evaluated tasks exhibit negative Skills effects. The worst cases include taxonomy-tree-merge (-39.3pp) and energy-ac-optimal-power-flow (-14.3pp). Blind Skills application is counterproductive - domain-specific deployment strategies are essential.

Less is more

Our ablation experiments reveal that Skills design matters enormously:

- 2-3 Skills per task provides optimal benefit (+20.0pp). Going to 4+ Skills shows diminishing returns (+5.2pp).

- Compact Skills (+18.9pp delta) outperform comprehensive documentation (+5.7pp) by nearly 4x. Agents struggle to extract relevant information from lengthy Skills content.

Focused, concise procedural guidance beats exhaustive documentation every time.

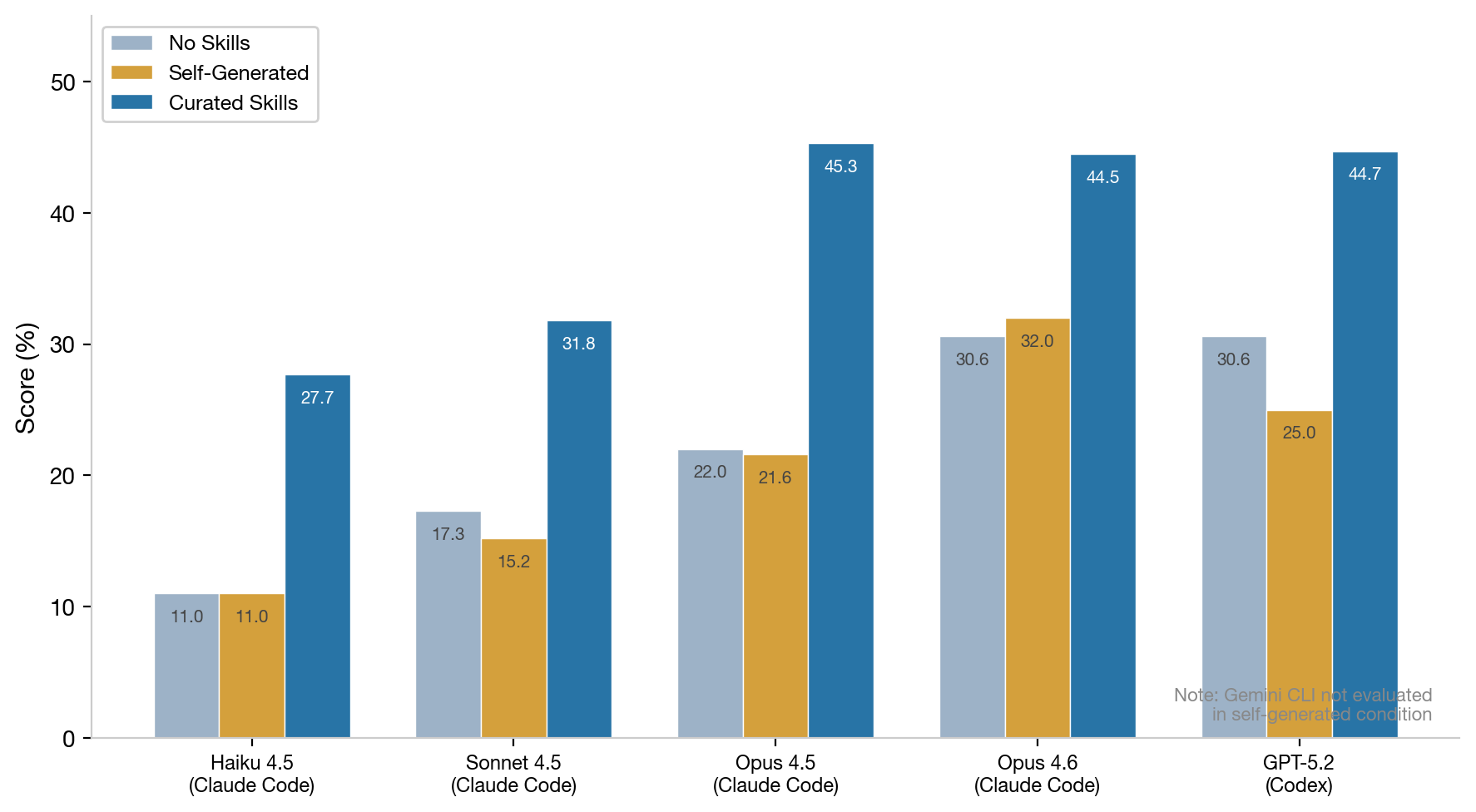

Self-generated Skills barely help

When prompted to generate their own Skills before solving tasks (the "Bring Your Own Skills" condition), models achieve only marginal improvement over the no-Skills baseline. Trajectory analysis reveals two failure modes:

- Models identify that domain-specific Skills are needed but generate imprecise procedures (e.g., "use pandas for data processing" without specific API patterns)

- For high-domain-knowledge tasks, models fail to recognize the need for specialized Skills entirely

Effective Skills require human-curated domain expertise that models cannot reliably self-generate.

Skills can substitute for model scale

Claude Haiku 4.5 with Skills (27.7%) substantially outperforms Opus 4.5 without Skills (22.0%). A smaller, cheaper model augmented with the right procedural knowledge can match or exceed a larger model operating without guidance. This has significant implications for cost-effective deployment.

Full Skills content is critical

Our Skills resolution ablation (L0 through L3) shows progressive improvement, with the largest gain coming from L2 to L3 - the addition of executable code examples and reference resources. When context is sufficient, full Skills (L3) provide a 45.5% pass rate compared to 16.4% without Skills. The procedural content, not just the presence of extra context, drives improvement.

What This Means

For practitioners

- Don't blindly apply Skills. Evaluate whether Skills help for your specific domain and use case. Some tasks show negative effects when Skills are applied.

- Keep Skills concise. A focused SKILL.md with 2-3 targeted procedures outperforms a comprehensive library.

- Include working examples. The biggest performance jump comes from adding executable code examples and reference resources.

- Consider smaller models. If you have good Skills, a smaller model may match a larger one at lower cost.

For researchers

- Paired evaluation is essential. Single-condition benchmarks miss the interaction between Skills and model capabilities. Always evaluate with and without Skills.

- Skills efficacy is context-dependent. It depends on the domain, model, harness implementation, and Skills design - not just whether Skills are present.

- Automatic Skills synthesis is an open problem. Models cannot reliably generate their own procedural knowledge, suggesting opportunities for research in Skills authoring and curation.

Explore SkillsBench

- Browse our Task Registry to see all 86 tasks across 11 domains

- Check the Leaderboard for detailed model comparisons

- Explore the Skills that drive performance improvements

- Read the Documentation to learn how to contribute tasks or run evaluations

SkillsBench and all evaluation infrastructure are fully open-source. We welcome contributions from the community - whether new tasks, improved Skills, or novel evaluation methodologies.